Contents

Literature review

Introduction

Data quality

1. Data definition

2. Quality definition

3. Data quality

4. Importance of data quality

4.1. Data quality importance in healthcare

Data quality and usability in healthcare

5. Data quality dimensions

5.1. Data quality frameworks

5.2. Defining data quality dimensions

6. Metadata

7. Metadata quality

System architecture:

Literature review

Introduction

This part presents a brief review of the literature on data quality and its importance, the different data quality dimensions and frameworks, and metadata quality.

Data quality

1. Data definition

Data is the central concept of data quality, so understanding its meaning is important. According to Hicks, data is “a representation of facts, concepts or instructions in a formalized manner suitable for communication, interpretation, or processing by humans or by automatic means” (Hicks, 1993). The concept of data can be explained from three points of view (objective, subjective and intersubjective), each of which emphasizes a different possible role of data. A brief explanation of each view follows:

1. Objective view of data

In this view, data is factual and results from recording measurable objects or events. It contains details of individual objects and events, which are produced on an enormous scale in modern society. This view tends to assume that all processing of data will be automated. Some definitions of objective data proposed by different scholars are:

“Data represent unstructured facts.” (Avison & Fitzgerald, 1995)

“By themselves, data are meaningless; they must be changed into a usable form and placed in a context to have value. Data becomes information when they are transformed to communicate meaning or knowledge, ideas or conclusions.” (Senn [1982: 62] quoted by Introna [1992])

2. Subjective view of data

The subjective view is very different from the objective one. In this view, data does not necessarily present a correct, true and accurate account of a particular fact.

Some definitions of subjective data proposed by different scholars are:

Maddison defines data as “facts given, from which others may be deduced, inferred. Info. Processing and computer science: signs or symbols, especially for transmission in communication systems and for processing in computer systems, usually but not always representing information, agreed facts or assumed knowledge; and represented using agreed characters, codes, syntax and structure” (Maddison [1989: 168] quoted by Checkland and Holwell [1998]).

Further example definitions are:

“Data: Facts, concepts or derivatives in a form that can be communicated and interpreted.” (Galland, 1982)

“Data are formalized representations of information, making it possible to process or communicate that information.” (Dahlbom & Mathiassen, 1995: 26)

3. Intersubjective view of data

In this view, establishing communication is the main purpose, and data can be processed and provided directly by a person or by a computer. Information can also be retrieved from this kind of data.

This kind of data is stored in formalized, structured database records, so it relies on accepted codes, structures, syntax, characters and conventions for encoding and decoding. A series of bits counts as data only if we have a key to decode it; for instance, a text data structure is based on the syntax and semantics of a language.

Because this kind of data is recordable and has a predesigned structure, it is suitable for further processing and interpretation, and more information can be inferred from it, so it is potentially useful.

“Data: The raw material of organizational life; it consists of disconnected numbers, words, symbols, and syllables relating to the events and processes of the business.” (Martin & Powell 1992)

2. Quality definition

Much like data, the meaning and concept of quality can be presented in different ways by different disciplines. W. Edwards Deming was one of the first quality proponents and is famous for his work on the industrial renewal of Japan after World War II. He claimed that the productivity improvements that create a competitive position in industry are the results of quality improvements (Deming, 1982). He emphasized that low quality wastes production capacity and effort and causes cost increases and rework. In his view, the most important part of the production line is the customer (Deming, 1982).

He strongly believed that “the cost to replace a defective item on the assembly line is fairly easy to estimate, but the cost of a defective unit that goes out to a customer defies measure” (Deming, 1982).

The Deming Prize was established by the Union of Japanese Scientists and Engineers in 1951 to recognize organizations that achieve a certain level of quality (Mahoney & Thor, 1994).

In 1988, Juran contributed to the study of quality and proposed that quality means fitness for use (Juran, 1988). He emphasized the role of customers in defining and measuring quality. Juran held that customers are all the people who are affected by our products and processes, and he distinguished between various kinds of customers, such as internal and external customers (Juran, 1988). He believed that three reasons force an organization to pay attention to quality: declining sales, the costs of poor quality, and threats to society. He said that every organization must do three things: quality planning, quality improvement and quality control (Juran, 1988).

The third person who worked on quality is Crosby. He continued his colleagues’ emphasis on the importance of customers and said that “the only absolutely essential management characteristic of the twenty-first century will be to acquire the ability to run an organization that deliberately gives its customers exactly what they have been led to expect and does it with pleasant efficiency” (Crosby, 1996).

He states that defining quality is difficult because everyone has a different definition of it and assumes that others share that definition (Crosby, 1996).

Another notable effort to improve quality was made by the US Congress, which established the Malcolm Baldrige National Quality Award in 1987. This award evaluates each business on seven major criteria and focuses on customer satisfaction and preventive behavior rather than a passive approach to quality management (Mahoney & Thor, 1994).

Another attempt in this area is the development of international standards such as the ISO 9000 series. These standards focus on the capabilities of organizations with regard to quality management.


3. Data quality

Data quality has become a very important subject of study in the information systems area and a vital issue for modern organizations. During the last decades, the number of data warehouses and databases has grown enormously, and we face a huge amount of data. Decisions about the future activities of organizations depend on stored data, so the quality of that data is very important (WHO, 2003).

The term “data quality” or “information quality” is often defined as the “fitness for use” of information (Wang & Strong, 1996).

Good quality data means that information is complete, permanent, accurate and based on standards; improving business information in this way helps to reduce costs, improve productivity and accelerate service.

Data has quality when it is economical, can be created rapidly, supports the evaluation of decisions and is effective. Data quality is a multidimensional concept that includes factors such as relevancy, accessibility, accuracy, timeliness, documentation, user expectations and capability.

However, the way we collect data and build datasets, and the amount of data we gather, influence characteristics such as cost, accuracy and user satisfaction, so good or bad data quality has a greater impact on our results than statistical analysis alone will reveal.

For instance, some scientists claim that the terrorist attacks of September 11, 2001, resulted from the US government’s failure to collect accurate and relevant data in federal databases, and that the attacks might have been prevented with correct data and correct analysis.

There are two kinds of decisions: some are based on particular data, and others are about the data itself. Both kinds incur costs, so it would be useful to know:

- how much it costs to achieve a given level of data quality

- what financial benefit an organization gains after improving data quality

- what the impacts and costs of poor data quality are

Although data quality is a relatively new subject, researchers have developed frameworks that define data quality criteria, factors and dimensions, together with ways to assess and measure some of these factors in organizations. More recently, international standards bodies have also developed frameworks and definitions for this subject (ISO/IEC, 2008).

Over the past decade, research activity in the data quality field on measuring and improving the quality of information has increased dramatically (2).

4. Importance of data quality

Data quality has significant implications. On January 28, 1986, seven astronauts were killed when the Space Shuttle Challenger exploded seconds after lift-off.

A similar event happened about seventeen years later, in 2003, when the shuttle Columbia broke apart during re-entry and again seven astronauts were killed. In 1988, the U.S. Navy cruiser USS Vincennes shot down an Iranian commercial airliner, killing all 290 people on board.

On 11 September 2001, nineteen hijackers passed through airport security, hijacked four commercial airplanes and killed nearly 3,000 people.

These horrible events cost many lives and led to international crises and wars between countries, but they all have something in common. According to the investigating commissions, at least two things are common to these events: first, in all of them the outcomes ran against the organization’s aims and objectives, and second, in all of them inadequate data and poor data quality were obvious major causes of the accidents (9/11 Commission, 2004; Columbia Accident Investigation Board, 2003).

For instance, the Challenger accident was investigated by the Rogers Commission, which found that the decision to launch “was based on incomplete and sometimes misleading information” (Rogers Commission, 1986).

According to Fisher and Kingma’s (2001) investigation of the Vincennes incident, “data quality was a major factor in the USS Vincennes decision-making process” (Fisher & Kingma, 2001). The commission that investigated the 11 September attacks in the US found that “relevant information from the National Security Agency and the CIA often failed to make its way to criminal investigators” (Fisher & Kingma, 2001).

Regarding the Columbia accident, the investigation found that “the information available about the foam impact during the mission was adequate” [n], yet it also noted that “if Program managers had understood the threat that the . . . foam strike posed . . . a rescue would have been conceivable” (Fisher & Kingma, 2001).

We cannot claim that insufficient data quality was the main cause of all these events, but “it still remains difficult to believe that proper decisions could be made with so many examples of poor data quality” (Fisher & Kingma, 2001).

We can learn many things from these accidents, and they show the importance of data quality, but they are not ordinary events. In contrast, there are many everyday examples of poor data quality around us. One example is a hospital nurse who misplaced a decimal point, did not notice the problem and caused a paediatric patient overdose (Belkin, 2004); another is an eyewear company that loses at least 1 million dollars each year because of a fifteen percent lens-grinding rework rate (Wang et al., 1998); a third is a healthcare center that paid over four million dollars to patients who were no longer eligible for benefits (Katz-Haas & Lee, 2005).

Some organizations that recognize the significance of data quality have improved their data resources to resolve billing discrepancies (Redman, 1995), to gain the benefits of accurate and complete data (Campbell et al., 2004), or to raise customer satisfaction (McKinney et al., 2002).

Although estimates of the overall cost of business problems and losses caused by poor data quality vary across businesses, it exceeds one billion dollars each year, and this cost includes the cost of human lives and of permanent and continuing changes (Fisher & Kingma, 2001).

4.1. Data quality importance in healthcare

Two important reasons have made data quality a critical subject in healthcare in recent years: first, it drives improvements in patient care standards and procedures, and second, it affects government investment in maintaining health services for the population.

All parts of health care, including remote aid stations and clinics, hospitals and health centers, and health departments and ministries, have to be concerned about poor data quality and its effects on the outcomes and outputs of this sector.

In most countries, administrators are troubled by poor health and medical record documentation, conflicting health codes, large numbers of medical records and data waiting to be coded, and poor use of and access to sound data (WHO, 2003).

The significance of data quality in health care can be summarized as follows:

- Patient care: providing effective, adequate and appropriate care and reducing health hazards

- Up-to-date patient information

- Efficient administrative and clinical operations, such as effective communication with patients and their families

- Strategic planning

- Monitoring the health of society

- Reducing health hazards (for example, treating the wrong patient or giving the wrong treatment)

- Supporting future investments in healthcare (Moghadasi, 2005).

One of the most substantial dimensions of data quality is usability, which makes a product simple and pleasant to use.

Data quality and usability in healthcare

Kahn and his colleagues introduced dimensions such as accessibility, believability, ease of manipulation and reputation as factors of usability (Kahn et al., 2003).

The usability of the data and information provided by healthcare systems is a principal issue for practitioners. In addition, it is an essential factor in end-user and patient acceptance of the technology (Rianne and Boven, 2013). Usability can be defined as the capability of a system to enable users to perform their tasks calmly, pleasantly, efficiently and effectively (Preece et al., 2002), whereas inadequate usability in the administration and installation of information systems has a direct impact on user satisfaction (Hassan Shah, 2009).

Therefore, usability is now recognized as a main factor in interactive healthcare systems, and its evaluation has become more important in recent years.

A system with high usability has benefits such as helping and supporting users, reducing user errors, increasing the rate at which users accept the system, and increasing efficiency (Maguire, 2001).

A description of usability is provided by Jakob Nielsen, who is well known in usability research: “a quality attribute that assesses how easy user interfaces are to use” (Nielsen, 1993). He explained that “the word ‘usability’ also refers to methods for improving ease-of-use during the design process”, and he stated that usability has five essential components:

- Learnability: how easily users can accomplish basic tasks the first time they encounter the design.

- Efficiency: how quickly users can perform tasks once they have learned the design.

- Memorability: how easily users can re-establish their proficiency when they return to the design after a period of not using it.

- Errors: how many errors users make, how severe these errors are, and how much effort it takes to recover from them.

- Satisfaction: how pleasant and comfortable users find it to use the design.

ISO 9126 (1991) offered a definition of quality in the software engineering field, classified usability as a quality characteristic and described it as follows: “Usability is the capability of the software product to be understood, learned, used and attractive to the user when used under specified conditions” (ISO, 1991).

Increasing the acceptance rate of a system by its users is an indirect result of system design. During the last decade, there has been a trend toward creating and developing systems with a high degree of usability. Today, the main goal of designers is to develop a usable and useful system (Hartson, 1998). They believe that people are the main purpose of system design, so a system must be usable for them. The results of some studies have shown that if a system functions correctly but does not meet users’ expectations, it will not be used in the future (Bevan, 1995).

Information system users can be important participants in the design process because they have valuable experience of working with systems. Human-centered interface design methods emphasize involving users as a main part of the design process. In these methods, users can help designers take users’ needs into account when designing the system, verify design alternatives, and perform usability tests during system development (Sadoghi et al., 2012).

Reference:[x]


5. Data quality dimensions

Generally, data quality is a multidimensional concept that reflects different aspects and characteristics of data [y].

There is no general agreement about the factors and dimensions of data quality. Price and Shanks (2005) identified four different research approaches to describing data quality: empirical, practitioner-based, theoretical and literature-based.

- Empirical approaches: these are based on feedback from data consumers to identify quality criteria and factors and group them into categories, as in the research of Kahn et al. (2002) or Wang & Strong (1996).

- Practitioner-based approaches: these are based on industrial experience and ad hoc observation. Some researchers claim that this approach lacks rigor. English (1999) used this approach to develop a model.

- Theoretical approaches: these are derived from information economics and communication theory. Some researchers believe that this approach is weak in relating its concepts to one another.

- Literature-based approaches: these derive data quality criteria from a literature review and different kinds of analysis.

A fifth approach could perhaps be defined: a design-oriented approach that addresses the intended use of data and information at design time [z]. Unlike the other approaches, it helps system designers understand the views of different system stakeholders and gives them real guidance for recognizing data deficiencies by mapping the state of an information system onto the state of the real world [aa].

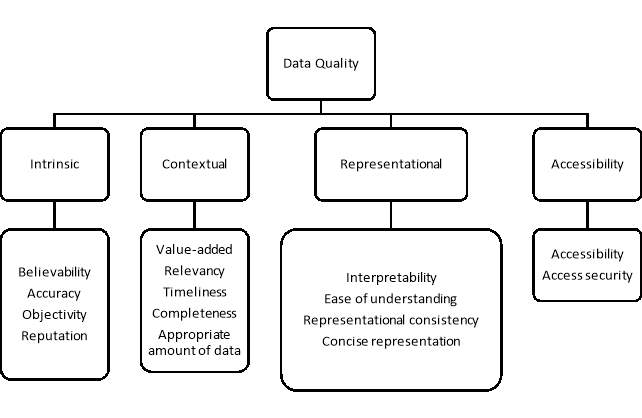

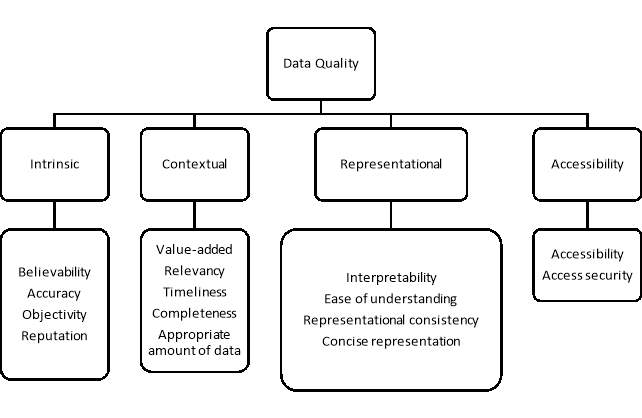

5.1. Data quality frameworks

Many data quality frameworks and categorizations of dimensions have been defined by different researchers. This section introduces some of them.

In 1974, Gallagher presented one of the first data quality frameworks. In determining the value of information systems, his framework considered usefulness, desirability, meaningfulness and relevance, among others, as factors of data quality (Gallagher, 1974).

A few years later, in 1978, Halloran and his colleagues identified accuracy, relevance, completeness, recoverability, access security and timeliness as data quality factors. They also specified metrics for each of these in terms of the overall system, stating that “an organization can keep error statistics relating to data accuracy”.

They described relevance as “how [the system’s] inputs, operations, and outputs fit in with the current needs of the people and the goals it supports” (Halloran, 1978).
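Halloran and colleagues’ remark that an organization can keep error statistics on data accuracy can be illustrated with a small sketch. The example below assumes a hypothetical audit in which each record has already been checked against a trusted source and flagged as correct or erroneous; it then reports the error rate and an accuracy score computed as one minus that rate. The record structure, the names `AuditedRecord`, `AccuracyStats` and `accuracy_statistics`, and the simple-ratio scoring are illustrative assumptions, not Halloran’s own metric.

```python
from dataclasses import dataclass


@dataclass
class AuditedRecord:
    """A hypothetical record that has been checked against a trusted source."""
    record_id: str
    has_error: bool


@dataclass
class AccuracyStats:
    """Simple error statistics for one batch of audited records."""
    errors: int
    total: int
    error_rate: float
    accuracy: float


def accuracy_statistics(records: list[AuditedRecord]) -> AccuracyStats:
    """Count flagged errors and derive an error rate and an accuracy score.

    The accuracy score is one minus the error rate, a simple-ratio
    measure assumed here for illustration.
    """
    if not records:
        raise ValueError("at least one audited record is required")
    errors = sum(1 for record in records if record.has_error)
    error_rate = errors / len(records)
    return AccuracyStats(errors, len(records), error_rate, 1.0 - error_rate)


if __name__ == "__main__":
    batch = [
        AuditedRecord("r1", has_error=False),
        AuditedRecord("r2", has_error=True),
        AuditedRecord("r3", has_error=False),
        AuditedRecord("r4", has_error=False),
    ]
    print(accuracy_statistics(batch))
```

With one flagged record out of four, the sketch reports an error rate of 0.25 and an accuracy of 0.75. Recording such statistics per batch or per period would let an organization follow the accuracy of its data over time.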

Another framework was introduced in 1996 by Zeist and Hendricks. Their model is an extension of the ISO model and groups data quality factors into the dimensions Functionality, Reliability, Efficiency, Usability, Maintainability and Portability.

Although all of the previous frameworks capture some aspects of data quality, Wang and Strong developed a framework in 1996 that is the basis of much of today’s research (Wang & Strong, 1996).

They identified the data quality factors that data consumers require. They also stated that “although firms are improving data quality with practical approaches and tools, their improvement efforts tend to focus narrowly on accuracy”. Their research began by gathering about 200 candidate data quality items, then reduced the list through further analyses, and finally introduced 15 dimensions in four categories:

- Intrinsic DQ: data has quality in its own right.

- Contextual DQ: data quality must be considered within the context of the task at hand.

- Representational DQ and accessibility DQ: these emphasize the important role of systems.

This framework indicates that high-quality data must be contextually appropriate for the task, clearly represented, accessible to the data consumer and intrinsically well-defined.
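The four categories and fifteen dimensions can be written down as a simple lookup structure, for example to tag the data quality problems found during an assessment with their category. The dimension names below follow the commonly cited version of Wang and Strong’s (1996) framework; the dictionary layout and the `category_of` helper are illustrative assumptions, not part of the framework itself.

```python
# Wang and Strong's (1996) data quality categories and their dimensions,
# as commonly cited; the data structure itself is only an illustration.
WANG_STRONG_FRAMEWORK: dict[str, tuple[str, ...]] = {
    "Intrinsic": ("believability", "accuracy", "objectivity", "reputation"),
    "Contextual": ("value-added", "relevancy", "timeliness", "completeness",
                   "appropriate amount of data"),
    "Representational": ("interpretability", "ease of understanding",
                         "representational consistency",
                         "concise representation"),
    "Accessibility": ("accessibility", "access security"),
}


def category_of(dimension: str) -> str:
    """Return the category a dimension belongs to (hypothetical helper)."""
    wanted = dimension.strip().lower()
    for category, dimensions in WANG_STRONG_FRAMEWORK.items():
        if wanted in dimensions:
            return category
    raise KeyError(f"unknown data quality dimension: {dimension!r}")


if __name__ == "__main__":
    total = sum(len(dims) for dims in WANG_STRONG_FRAMEWORK.values())
    print(total, "dimensions")
    print(category_of("Timeliness"))
```

Keeping the framework as plain data makes it easy to count problems per category, which mirrors how Strong and colleagues later used the framework to classify the issues they found in organizations.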

In 1997, Strong and his colleagues applied this framework in three different organizations to identify their data quality issues and suggest solutions. They found that data quality problems can move between categories; for example, a problem related to incompatible data representations can surface as an accessibility issue. They therefore described “two different approaches to problem resolution: changing the systems or changing the production process” (Strong, Lee & Wang, 1997). They strongly emphasized that solving data quality problems requires a perspective beyond the limitations of intrinsic quality dimensions.

Figure 5. Data quality as a multi-dimensional construct.

Note. Adapted from Wang, R. Y., and Strong, D. M. (1996). Beyond accuracy: What quality means to data consumers. Journal of Management Information Systems, 12(4), 5-34.

Two other researchers, Shanks and Corbitt, proposed a semiotic-based framework for information quality in 1999. Their factors are: well-defined/formal syntax, comprehensive, unambiguous, meaningful, correct, timely, concise, easily accessed, reputable, understood, and awareness of bias (Shanks & Corbitt, 1999).

TABLE IV. IQ DIMENSIONS BASED ON SEMIOTIC AND QUALITY ASPECTS [13]